I don’t get a lot of time to explore the world of machine learning (LLMs, Stable Diffusion, etc.), so the biggest motivation was just the learning experience. Aside from that, I had used a few other LLM frontends like dalai and wasn’t satisfied with the experience for a few reasons:

- Each chat was a separate instantiation of the model. Chat context had to be provided by manually pasting your previous message history into the chat.

- It wasn’t easy to plug an unsupported model into the frontend. You would need to find and download the models and copy them to the right place in the filesystem.

- You had to manually write warm-up prompts and any prefixes or suffixes you wanted to use in front of each chat message.

Originally, I started this project with egui to learn the framework, but there was going to be enough custom UI work that I didn’t want to take the time to write the painting code for those elements. So I dropped that and explored Tauri, which (so far) has been a great experience.

The models are executed by rustformers/llm, which is generally pretty good, but I’ve run into some issues (with the M1 Metal implementation, for example). Because of that, I’ve been considering abstracting away the backend so that I could plug in llama.cpp or something else if I wanted to.
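To make the backend-abstraction idea a bit more concrete, here is a minimal sketch of what it could look like in Rust. Everything in it is hypothetical: `InferenceBackend`, `EchoBackend`, and `BackendRegistry` are names invented for illustration, and the sketch does not use the real rustformers/llm or llama.cpp APIs. A real backend would wrap one of those libraries behind the same trait.

```rust
use std::collections::HashMap;

/// Hypothetical interface the rest of the app would program against.
/// Each concrete backend (rustformers/llm, llama.cpp, ...) would implement it.
pub trait InferenceBackend {
    /// Short identifier used to select the backend.
    fn name(&self) -> &str;

    /// Run inference on `prompt`, calling `on_token` for every generated token.
    fn generate(
        &mut self,
        prompt: &str,
        on_token: &mut dyn FnMut(&str),
    ) -> Result<(), String>;
}

/// Stand-in backend for illustration only: it "generates" by echoing the
/// prompt back one whitespace-separated word at a time.
pub struct EchoBackend;

impl InferenceBackend for EchoBackend {
    fn name(&self) -> &str {
        "echo"
    }

    fn generate(
        &mut self,
        prompt: &str,
        on_token: &mut dyn FnMut(&str),
    ) -> Result<(), String> {
        for word in prompt.split_whitespace() {
            on_token(word);
        }
        Ok(())
    }
}

/// Keeps the available backends behind trait objects so they can be
/// looked up by name at runtime.
#[derive(Default)]
pub struct BackendRegistry {
    backends: HashMap<String, Box<dyn InferenceBackend>>,
}

impl BackendRegistry {
    pub fn new() -> Self {
        Self::default()
    }

    /// Register a backend under the name it reports.
    pub fn register(&mut self, backend: Box<dyn InferenceBackend>) {
        self.backends.insert(backend.name().to_string(), backend);
    }

    /// Run `prompt` through the named backend and collect the tokens,
    /// joined with single spaces.
    pub fn complete(&mut self, name: &str, prompt: &str) -> Result<String, String> {
        let backend = self
            .backends
            .get_mut(name)
            .ok_or_else(|| format!("unknown backend: {}", name))?;
        let mut tokens: Vec<String> = Vec::new();
        backend.generate(prompt, &mut |tok: &str| tokens.push(tok.to_string()))?;
        Ok(tokens.join(" "))
    }
}
```

With something like this in place, the UI layer would only call `registry.complete("echo", "...")` (or a streaming variant) and would not need to know which library is actually running the model. Trait objects are used here so the backend can be chosen at runtime; generics would also work if the choice were fixed at compile time.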

The frontend is written in React and uses the MUI Joy UI component library.

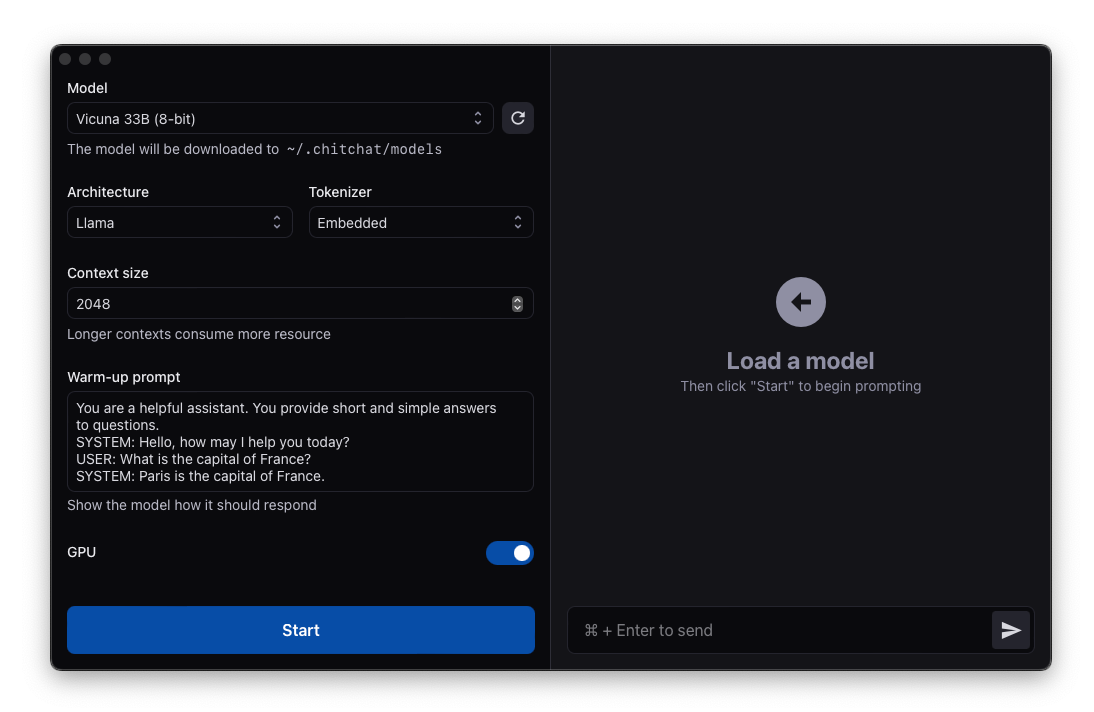

- Many models are built into the app and will be downloaded automatically if they’re not already available

- New models can be built into the app by adding them to a manifest file

- Unsupported models can be used by dropping them into the `~/chitchat/models` directory

- Use warm-up prompts to prime the model before chatting

- Chat sessions are persisted between messages, so the model is contextually aware of the conversation

- GPU support

- Cross-platform

- Support LoRAs

- Support more models out-of-the-box

- Extensions

- UI bug fixes and improvements

- Additional backends (like llama.cpp)